Pixotope

The revolution in virtual production systems

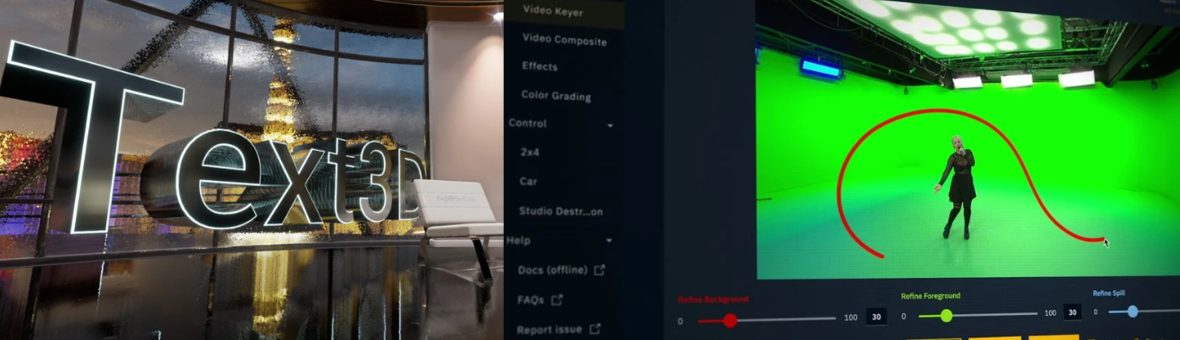

Pixotope is an open software-based solution for the rapid creation of virtual studios, augmented reality (AR), extended reality (XR) and on-air graphics.

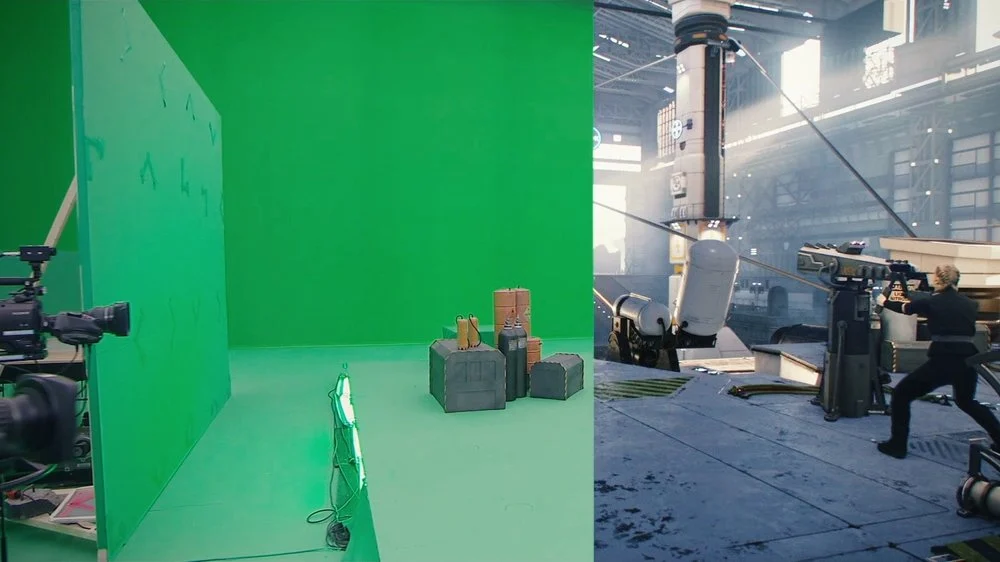

Pixotope uses Epic Games’ Unreal Engine to produce photorealistic renderings in real time. Together, Unreal Engine and Pixotope let designers quickly create virtual sets, virtual environments and augmented content. That content can include terrain and foliage, particle and physics effects (rain, smoke, fire, hair, cloth, explosions, etc.), and simulated cameras and lens properties (lens distortion, depth of field, chromatic aberration).

Pixotope is designed for on-air use, enabling rapid design and delivery of virtual, augmented or mixed reality content. Users can configure, create and control any type of virtual production from a single user interface.

You can generate content with Pixotope’s unique automatic generation tool or build it from scratch with its specialized WYSIWYG editing tools. Either way, Pixotope harnesses the real-time power of Unreal Engine to create anything in cinematic quality, from a single-camera project to a multi-camera live production in a virtual studio.

A flexible subscription model makes Pixotope easy to deploy across an organization and to tailor to the specific requirements of each project.